CHALLENGE

Our customer is a top FMCG cosmetics and beauty products retail company. Managing a large number of SKUs brought risks in collection planning: certain products sold out before demand was met, whereas others stayed unsold for several months. This drove up costs significantly and cut into profits. The company contacted X-Byte Enterprise Crawling for a data-driven solution to its sales issues and to reduce variation in its collections.

SOLUTION

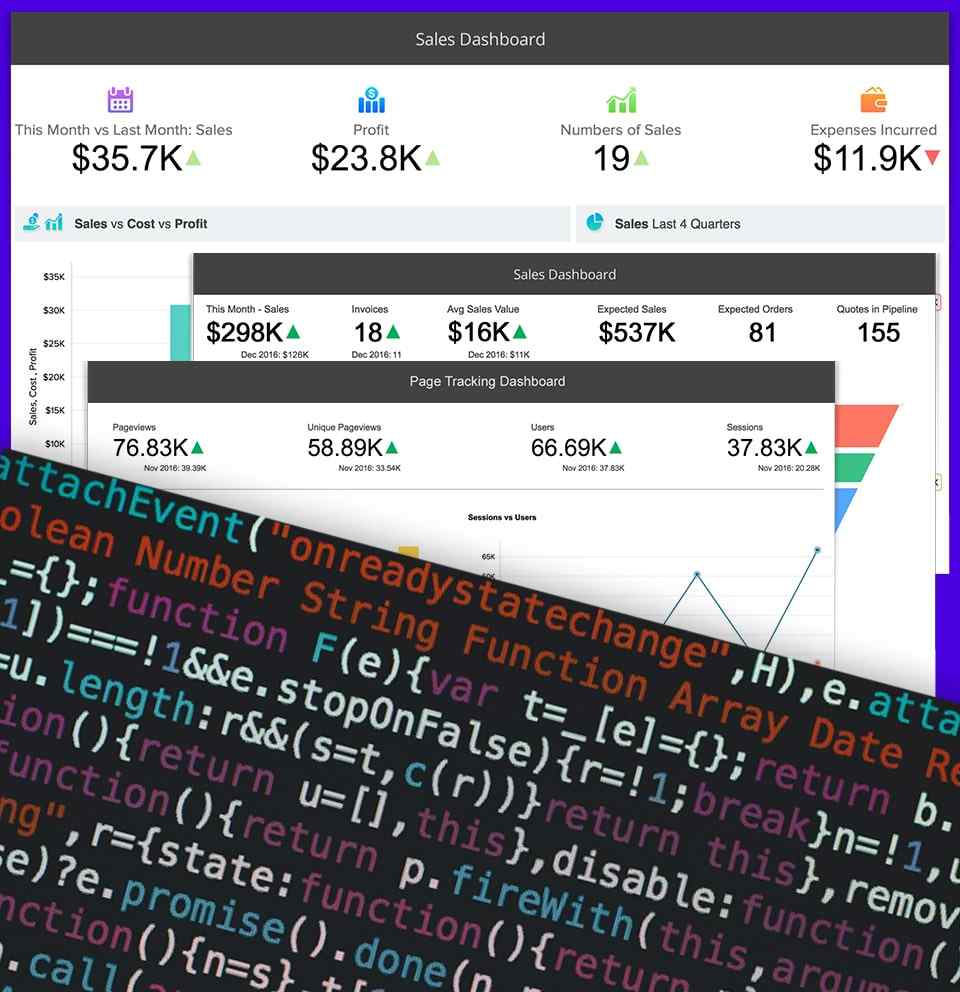

We built customized web scrapers for three distinct objectives to establish data collection. The scraper gathered data on current product inventory, showing how many units of each product were still available in stock. By running the same algorithms every day, we created a steady data pipeline. In addition, we built an administration panel that could serve as a shared dashboard.
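A daily pipeline of this kind produces one inventory snapshot per day, and comparing consecutive snapshots is one simple way to surface what changed. The following is a minimal sketch under stated assumptions: the `Snapshot` fields (`price`, `stock`), the SKU keys, and the `diff_snapshots` helper are hypothetical names for illustration, not the structures used in the project.

```python
from typing import Dict, List, NamedTuple, Optional


class Snapshot(NamedTuple):
    """One product's state on a given day (hypothetical fields)."""
    price: float
    stock: int


def diff_snapshots(
    previous: Dict[str, Snapshot],
    current: Dict[str, Snapshot],
) -> List[Dict[str, Optional[object]]]:
    """Return one entry per SKU whose state differs between two daily snapshots.

    A SKU missing from one side is reported with None for that side,
    which covers newly listed and delisted products.
    """
    changes = []
    for sku in sorted(previous.keys() | current.keys()):
        old = previous.get(sku)
        new = current.get(sku)
        if old == new:
            continue  # nothing changed for this product
        changes.append({"sku": sku, "old": old, "new": new})
    return changes


if __name__ == "__main__":
    yesterday = {"LIP-01": Snapshot(9.99, 120), "EYE-07": Snapshot(14.50, 40)}
    today = {"LIP-01": Snapshot(9.99, 95), "EYE-07": Snapshot(14.50, 40)}
    for change in diff_snapshots(yesterday, today):
        print(change)
```

A list of changes like this could feed a dashboard or an export, since each entry carries both the old and the new state.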

The client can now track all changes in real time, and the collected data can be exported for further use. The last step was testing performance and eliminating any technical errors. Following thorough efficiency checks, the entire procedure was rolled out countrywide. We checked all stocked products and their prices daily. Stock checks were done in two ways. One was to read the stock quantity directly from the website.

Next, we added an arbitrarily large quantity to the cart, for instance 2,000,000. This prompted the website to show an “error” that revealed how many units were actually available. To make sure that the competitors’ sites did not get overloaded, we left adequate intervals between requests and stretched every scraping session over several hours. We took care not to put any serious load on the competitors’ websites, since that could have adversely affected our client’s business. We also used a proxy to avoid getting blocked.
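The cart-based check and the spacing between requests can be sketched as follows. This is an illustration under stated assumptions, not the scraper used in the project: the error wording matched by the regular expression is hypothetical (each site phrases it differently), and `add_to_cart` is a placeholder callable standing in for whatever HTTP request, proxy configuration, and session handling a real site would need.

```python
import random
import re
import time
from typing import Callable, Dict, Iterable, Optional

# Deliberately larger than any plausible stock level.
PROBE_QUANTITY = 2_000_000

# Hypothetical error wording, e.g. "Only 1,250 items available".
_AVAILABLE_RE = re.compile(
    r"only\s+([\d.,]+)\s+(?:items?|units?|pieces?)", re.IGNORECASE
)


def parse_available_quantity(error_message: str) -> Optional[int]:
    """Extract the available quantity from a cart error message, if present."""
    match = _AVAILABLE_RE.search(error_message)
    if match is None:
        return None
    digits = re.sub(r"[.,]", "", match.group(1))  # drop thousands separators
    return int(digits) if digits else None


def probe_stock(
    product_ids: Iterable[str],
    add_to_cart: Callable[[str, int], str],
    min_delay: float = 5.0,
    max_delay: float = 15.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, Optional[int]]:
    """Probe each product once, pausing a random interval between requests.

    `add_to_cart(product_id, quantity)` must return the site's response text.
    """
    results: Dict[str, Optional[int]] = {}
    for index, product_id in enumerate(product_ids):
        if index > 0:
            # Randomized pause so the target site is never hit in bursts.
            sleep(random.uniform(min_delay, max_delay))
        message = add_to_cart(product_id, PROBE_QUANTITY)
        results[product_id] = parse_available_quantity(message)
    return results
```

Passing the request function and the `sleep` function in as parameters keeps the probing logic separate from transport details such as proxies, and makes the pacing easy to adjust when a session should be spread over several hours.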

X-BYTE SOLUTION

Setting up the Crawler: The crawler was initially configured to automatically scrape product prices and essential data fields for the current categories on a daily basis.

Data Template: A template was created by structuring the data according to the schema provided by the customer.

Delivery of Data: The final data was delivered regularly in XML format through a Data API, without any manual input from either side.
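As an illustration of the template and delivery steps, the sketch below serializes scraped records into XML using Python's standard library. The customer's actual schema is not described here, so the element names (`products`, `product`), the `sku` attribute, and the fields `name`, `price`, and `stock` are assumptions chosen for the example.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Mapping

# Hypothetical field list; a real template would follow the customer's schema.
FIELDS = ("name", "price", "stock")


def records_to_xml(records: Iterable[Mapping[str, object]], scrape_date: str) -> str:
    """Serialize scraped product records into a single XML document string."""
    root = ET.Element("products", attrib={"date": scrape_date})
    for record in records:
        product = ET.SubElement(root, "product", attrib={"sku": str(record["sku"])})
        for field in FIELDS:
            child = ET.SubElement(product, field)
            child.text = str(record[field])  # special characters are escaped on output
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    sample = [
        {"sku": "LIP-01", "name": "Matte Lipstick", "price": 9.99, "stock": 95},
        {"sku": "EYE-07", "name": "Brow & Lash Kit", "price": 14.50, "stock": 40},
    ]
    print(records_to_xml(sample, "2024-01-15"))
```

Building the document with `ElementTree` rather than string concatenation means characters such as `&` in product names are escaped automatically, so the output stays well-formed.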

The dataset contained all the information, including comments, news timelines, most viewed articles, customer behaviour, etc. All of the scraped data was indexed using hosted indexing components, and search APIs were made available so that the client could get results every few minutes.

RESULT

Our client’s assortment procedure improved, which resulted in a 47% increase in profits over the next 4 months, with 85% accuracy in demand forecasting. We approached the problem by using our data scraping mechanisms and building a customized web scraper that follows the provided algorithms. Our web scraper uses a proxy and time delays between queries to avoid a ban.

Our job was to create a streamlined purchasing system based on actual market conditions, which was achieved with great success! As a result, the client now has much better oversight of a highly competitive market and has improved its financial indicators.

After that, the client reorganized the company’s supply division by integrating the automated solution. A higher level of flexibility and certainty was achieved within just two months! Now, they can predict the demand for their products with an accuracy of 85%.