CHALLENGE

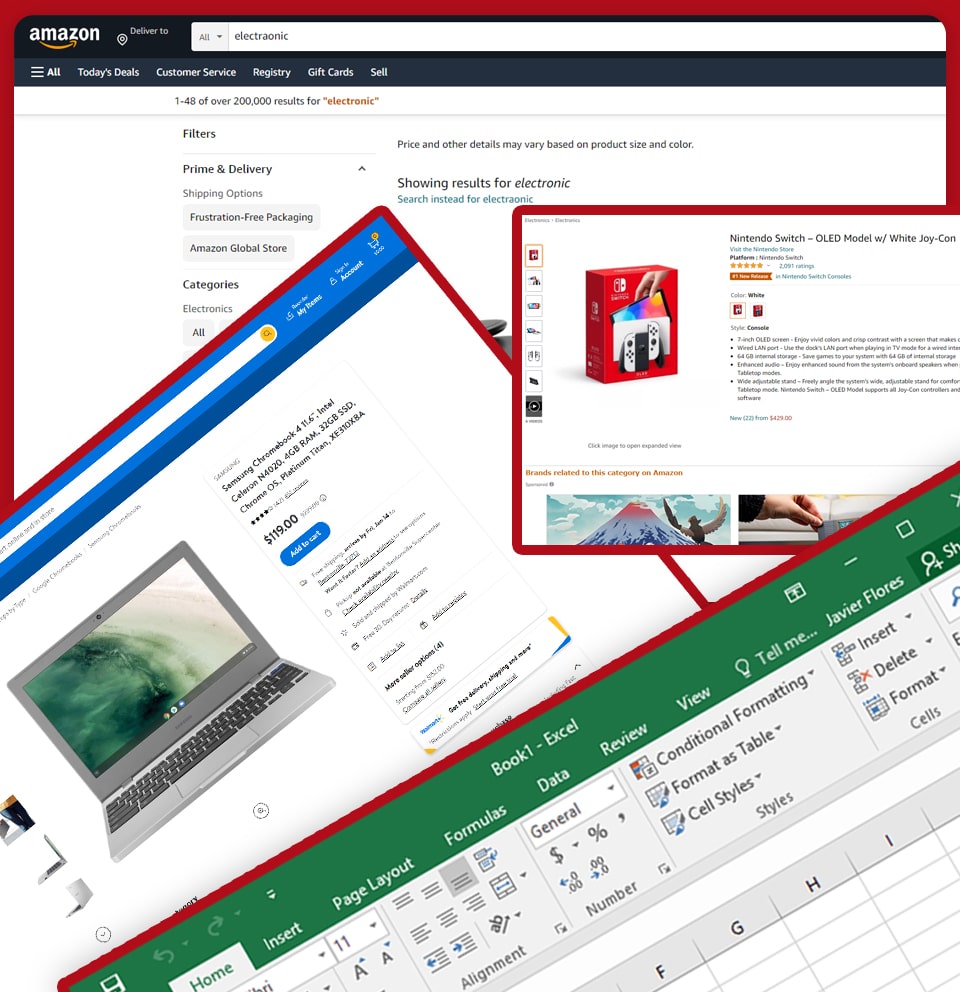

Two years ago, X-Byte Enterprise Crawling began a long-term joint project with a key legal player in the US market. The objective was to collect data from Amazon and Walmart for extensive lists of specified products and keywords.

The client hired X-Byte Enterprise Crawling to help it deal with unauthorized sellers, control and grow online sales, achieve MAP (minimum advertised price) compliance, eliminate channel conflicts, and protect customer experience and brand value.

SOLUTION

We developed several scrapers and built an admin panel for the customer, which allowed both sides to exchange data efficiently. Scraping was triggered by the keywords the client uploaded to the admin panel, and our job was to extract the product and seller data related to those keywords.
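The keyword-driven workflow described above can be pictured as a small job queue: each newly uploaded keyword becomes one scraping job per platform. The sketch below is illustrative only. It assumes an in-memory queue, and the names `ScrapeJob`, `PLATFORMS` and `enqueue_keywords` are hypothetical rather than part of the actual admin panel.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Iterable, Set

# Hypothetical platform list; the case study names Amazon and Walmart.
PLATFORMS = ("amazon", "walmart")


@dataclass(frozen=True)
class ScrapeJob:
    platform: str
    keyword: str


def enqueue_keywords(uploaded: Iterable[str], queue: Deque[ScrapeJob],
                     seen: Set[str]) -> int:
    """Normalize uploaded keywords and enqueue one job per platform for each new one."""
    added = 0
    for raw in uploaded:
        keyword = " ".join(raw.lower().split())  # lowercase, trim, collapse spaces
        if not keyword or keyword in seen:
            continue  # skip blanks and keywords that are already scheduled
        seen.add(keyword)
        for platform in PLATFORMS:
            queue.append(ScrapeJob(platform, keyword))
            added += 1
    return added
```

Normalizing before de-duplicating means that "Air Fryer" and " air  fryer " uploaded separately produce only one set of jobs.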

With these scrapers we extracted 20 million reviews from Amazon and 4 million products from Walmart. Extracting data from platforms as large as Amazon and Walmart is not easy, both because of the sheer number of pages and products and because these websites apply strict measures to limit scraping.

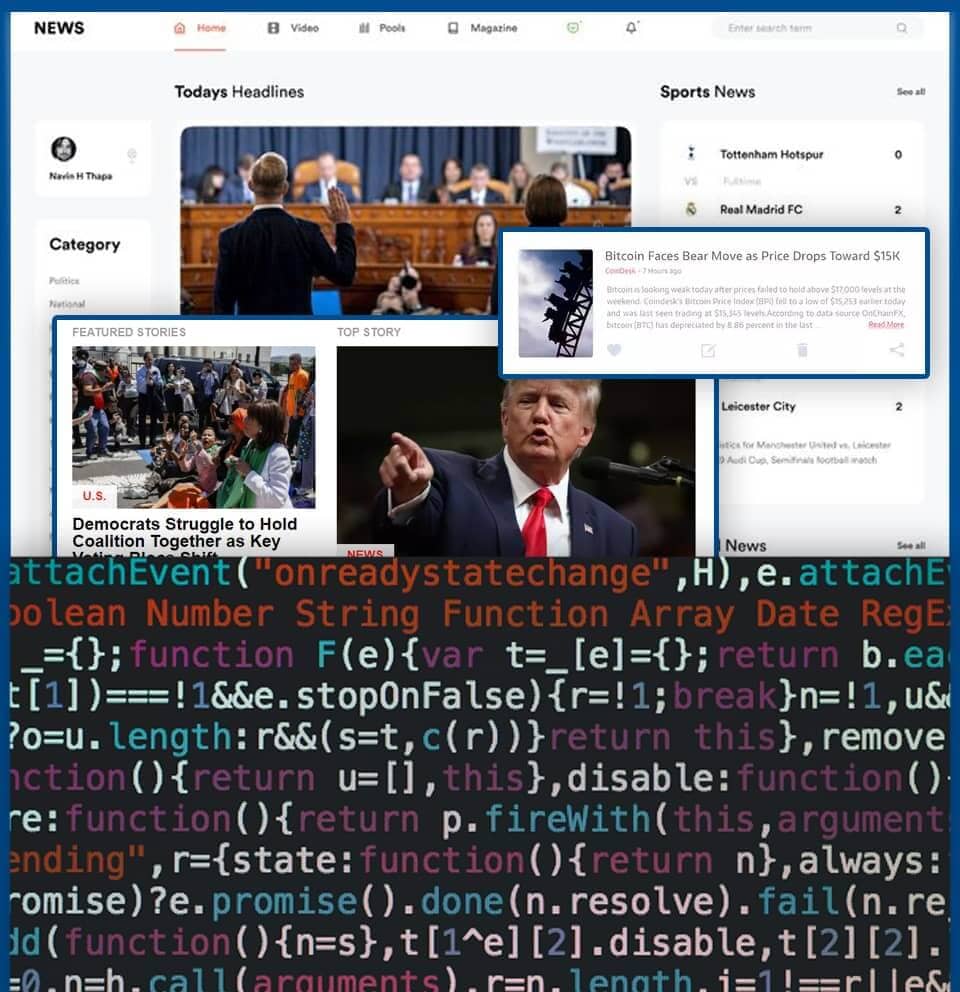

It is not always clear when a scraping run will deliver results, because product and catalog pages vary in structure and can confuse the scraper's logic. The challenge was to build a web crawler that runs reliably on a huge volume of data and a wide variety of inputs.
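One common way to cope with pages that vary in structure is to try several known layouts in order and flag the page when none of them match. The source does not say how X-Byte handled this, so the sketch below is an assumption: it presumes product data has already been parsed into nested dictionaries (for example from JSON embedded in the page), and the key paths in `PRICE_PATHS` are hypothetical.

```python
from typing import Any, Iterable, Mapping, Optional, Sequence, Tuple

# Hypothetical alternative locations of the price field across page layouts.
PRICE_PATHS: Sequence[Tuple[str, ...]] = (
    ("price", "current"),
    ("offers", "price"),
    ("priceInfo", "currentPrice", "price"),
)


def dig(data: Any, path: Iterable[str]) -> Optional[Any]:
    """Follow a key path through nested mappings; return None if any step is missing."""
    for key in path:
        if not isinstance(data, Mapping) or key not in data:
            return None
        data = data[key]
    return data


def extract_first(data: Mapping[str, Any],
                  paths: Sequence[Tuple[str, ...]]) -> Optional[Any]:
    """Try each known layout in order and return the first value found."""
    for path in paths:
        value = dig(data, path)
        if value is not None:
            return value
    return None  # no known layout matched: flag the page instead of guessing
```

Returning `None` rather than raising lets the crawler keep going and queue unmatched pages for review, so a new layout surfaces as a gap in the data instead of a crash.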

The scraper had to be highly resilient, which we achieved by combining request-scheduling methods with IP rotation to avoid recognizable bot behavior patterns. These are some of the precautions we followed during the process:

- A proxy pool that was replaced every 24 hours

- IP randomization

- Keeping the same selected IP for the whole scraping session

- IP addresses located within a realistic distance of a store
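The precautions listed above can be combined in a single helper. The sketch below makes several assumptions: `Proxy`, `ProxyPool`, the `fetch` provider callback and the `region` field are hypothetical; "realistic proximity" is simplified to an exact region match; and the delay values in `next_delay` are illustrative, not the ones used in the project.

```python
import random
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

REFRESH_SECONDS = 24 * 60 * 60  # swap the whole pool out every 24 hours


@dataclass(frozen=True)
class Proxy:
    address: str  # e.g. "host:port"
    region: str   # hypothetical: the store region this exit IP geolocates to


class ProxyPool:
    """Hypothetical helper applying the rotation rules listed above."""

    def __init__(self, fetch: Callable[[], List[Proxy]],
                 clock: Callable[[], float] = time.time,
                 rng: Optional[random.Random] = None) -> None:
        self._fetch = fetch  # returns a fresh batch of proxies from some provider
        self._clock = clock
        self._rng = rng if rng is not None else random.Random()
        self._proxies: List[Proxy] = []
        self._loaded_at = float("-inf")
        self._sessions: Dict[str, Proxy] = {}

    def _refresh_if_stale(self) -> None:
        if self._clock() - self._loaded_at >= REFRESH_SECONDS:
            self._proxies = self._fetch()
            self._loaded_at = self._clock()
            self._sessions.clear()  # bindings to retired IPs are dropped

    def proxy_for(self, session_id: str, store_region: str) -> Proxy:
        self._refresh_if_stale()
        if session_id in self._sessions:
            return self._sessions[session_id]  # same IP for the whole session
        nearby = [p for p in self._proxies if p.region == store_region]
        candidates = nearby or self._proxies  # prefer IPs close to the store
        if not candidates:
            raise RuntimeError("proxy pool is empty")
        chosen = self._rng.choice(candidates)  # random IP for each new session
        self._sessions[session_id] = chosen
        return chosen


def next_delay(rng: random.Random, base: float = 2.0, jitter: float = 3.0) -> float:
    """Randomized pause (seconds) between requests; base and jitter are illustrative."""
    return base + rng.uniform(0.0, jitter)
```

A scraping session would call `proxy_for` once per request and sleep for `next_delay(...)` seconds between requests. Injecting `clock` and `rng` keeps the 24-hour refresh and the random choice testable without waiting a day.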

Because Walmart switches between result pages via AJAX, we built an algorithm that uses the completion of the page load as its cue to begin extracting.
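The "wait for the load, then extract" idea can be sketched with plain callbacks. This is an assumption-laden illustration: `click_next`, `is_loaded` and `extract` are hypothetical hooks that a real crawler might back with a headless browser. The sketch only shows the control flow of polling for load completion before reading each page.

```python
import time
from typing import Any, Callable, List


def wait_for_load(is_loaded: Callable[[], bool], timeout: float = 15.0,
                  interval: float = 0.25,
                  clock: Callable[[], float] = time.monotonic,
                  sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll until the page reports that its AJAX load has finished."""
    deadline = clock() + timeout
    while clock() < deadline:
        if is_loaded():
            return True
        sleep(interval)
    return is_loaded()  # final check once the deadline has passed


def scrape_pages(click_next: Callable[[], bool],
                 is_loaded: Callable[[], bool],
                 extract: Callable[[], List[Any]],
                 max_pages: int = 50, **wait_options: Any) -> List[Any]:
    """Extract each result page only after its AJAX load completes."""
    results: List[Any] = []
    for page in range(1, max_pages + 1):
        if not wait_for_load(is_loaded, **wait_options):
            break  # load never finished; avoid scraping a half-rendered page
        results.extend(extract())
        if page == max_pages or not click_next():
            break  # limit reached or no further page
    return results
```

Stopping on a timeout, rather than extracting anyway, trades a little coverage for not recording stale data from the previous page.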

X-BYTE SOLUTION

Setting up the Crawler: The crawler was initially configured to automatically scrape product prices and other essential data fields for the existing categories on a daily basis.

Data Template: A template was created to structure the data according to the schema provided by the customer.

Delivery of Data: The final data was delivered regularly in XML format through a Data API, without any manual input from either side.
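The template and XML delivery steps above can be sketched together: map each raw item onto a fixed record, then serialize a batch of records to XML. The client's actual schema is not given, so the fields of `ProductRecord`, the raw keys (`id`, `title`, `seller`, `price`) and the element names are hypothetical. The Data API transport itself is not shown.

```python
import xml.etree.ElementTree as ET
from dataclasses import asdict, dataclass
from typing import Any, Dict, Iterable, Optional


@dataclass
class ProductRecord:
    """Hypothetical template; the real fields came from the client's schema."""
    platform: str
    keyword: str
    product_id: str
    title: str
    seller: str
    price: Optional[float]


def parse_price(text: Any) -> Optional[float]:
    """Turn strings like '$1,299.99' into a float; return None if unparseable."""
    cleaned = ("" if text is None else str(text)).replace("$", "").replace(",", "").strip()
    try:
        return float(cleaned)
    except ValueError:
        return None


def to_template(raw: Dict[str, Any], platform: str, keyword: str) -> ProductRecord:
    """Map one raw scraped item onto the agreed template."""
    return ProductRecord(
        platform=platform,
        keyword=keyword,
        product_id=str(raw["id"]).strip(),
        title=" ".join(str(raw.get("title", "")).split()),  # collapse whitespace
        seller=str(raw.get("seller", "")).strip(),
        price=parse_price(raw.get("price")),
    )


def records_to_xml(records: Iterable[ProductRecord]) -> bytes:
    """Serialize templated records into one XML document for delivery."""
    root = ET.Element("products")
    for record in records:
        item = ET.SubElement(root, "product")
        for name, value in asdict(record).items():
            ET.SubElement(item, name).text = "" if value is None else str(value)
    return ET.tostring(root, encoding="utf-8")
```

Because every record passes through the same dataclass, the XML always has the same element set, and ElementTree takes care of escaping characters such as `&` in product titles.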

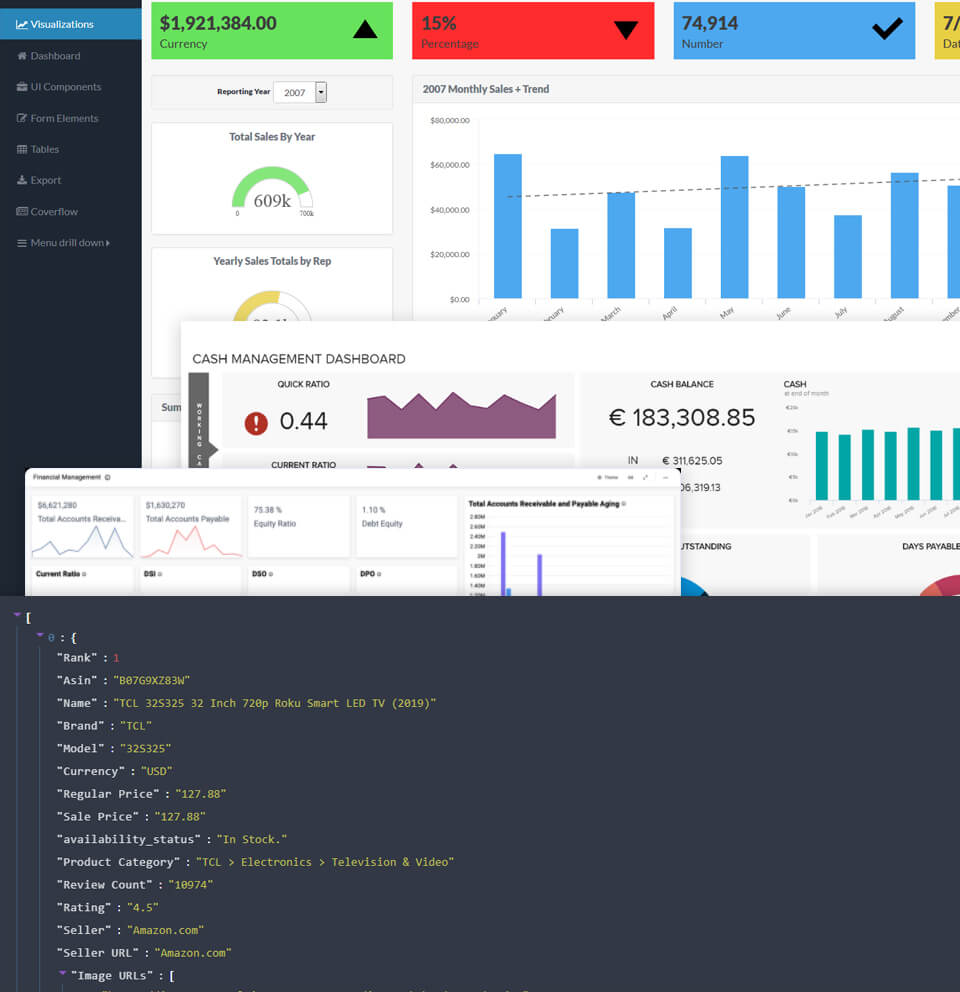

The dataset contained all the required information, including comments, news timelines, most-viewed articles and customer behaviour. All scraped data was indexed with hosted indexing components, and search APIs were exposed so that the client could retrieve results every few minutes.
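The source does not name the hosted indexing components. To illustrate what indexing for search involves, the sketch below builds a minimal in-memory inverted index. It is a stand-in for a hosted search engine, not a description of it, and `InvertedIndex` is a hypothetical name.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set

TOKEN = re.compile(r"[a-z0-9]+")  # simple lowercase alphanumeric tokenizer


class InvertedIndex:
    """Maps each token to the set of document IDs that contain it."""

    def __init__(self) -> None:
        self._postings: Dict[str, Set[str]] = defaultdict(set)

    def add(self, doc_id: str, text: str) -> None:
        for token in TOKEN.findall(text.lower()):
            self._postings[token].add(doc_id)

    def search(self, query: str) -> List[str]:
        """Return IDs of documents containing every query token (AND semantics)."""
        tokens = TOKEN.findall(query.lower())
        if not tokens:
            return []
        hits = set.intersection(*(self._postings.get(t, set()) for t in tokens))
        return sorted(hits)
```

Re-adding a document with changed text leaves its old tokens in place. A hosted engine handles updates and ranking, which is one reason to use one at this scale.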

RESULT

Our customer has successfully launched the eControl service, which now helps many US brands, and the data pipeline supports the company's legal examination of unfair sales practices.

As a result, the customer has been using the gathered data to combat unfair competition on behalf of large brands and to prevent brand erosion through price monitoring. Working with X-Byte gave them a steady flow of fresh, high-quality data for the given keywords, products and suppliers.