In this blog, we will help you use data scraping as well as a data visualization project. We would scrape e-commerce data from real e-commerce sites and then try to get more insights from that. The objective of this blog is to exhibit how to have product price data online as well as a few ways to analyze pricing data. We would also look for how price intelligence can make a huge difference for e-commerce companies while making any pricing decisions.

It is an easy procedure we will follow in this blog:

- Recognize data fields and sources

- Scrape and store data

- Study data

Recognize Data Fields and Sources

Websites

In the real-life project, you’d perhaps identify which sites you wish to have data from. For this blog, we are selecting a few well-known European e-commerce stores.

Data Fields to Extract

While extracting product data, we have a huge amount of data we might get from the e-commerce sites: product’s name, price, stock, product-specific attributes, category, reviews, etc. For now, we will concentrate on four data fields which have the possibility of giving us the most stimulating insights:

- Product’s Name

- Category

- Price

- Stock

Ethical Web Extraction

Before starting of writing the code for extracting data from a website, it’s very important to ensure that we are doing ethical scraping. Initially, we need to check a robots.txt file as well as observe if this permits us to visit pages we wish to have data from.

Example of robots.txt file:

User-agent: * Disallow: / User-Agent: * Disallow: /*.json Disallow: /api Disallow: /post Disallow: /submit Allow: /

A few things you can do to become acquiescent:

- Follow the rules

- Adjust crawling’s speed if required

- Recognize yourself with the UserAgent

- Do not damage the website

Scrape and Store the Data

It is a part where we scrape data from a website. We will use many modules of the Scrapy framework including Item, ItemLoader, as well as the pipeline. We wish to ensure that outputs are clean so that we can insert them into the database to do later analysis.

Scrapy Installation

We are utilizing Scrapy, a web data scraping framework, for the project. Installing Scrapy with the virtual environment is suggested so it doesn’t encounter different system packages.

Make a new folder as well as install virtualenv:

mkdir ecommerce cd ecommerce pip install virtualenv virtualenv env source env/bin/activate

Installing Scrapy:

pip install scrapy

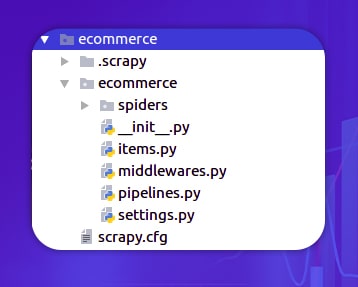

Make a New Scrapy Project

Now as we have installed Scrapy in the environment, we could make a newer Scrapy project:

scrapy startproject ecommerce

It will create a file structure:

Items

Formerly we would write spiders for every website, we need to make an item in items’ file that has formerly well-defined data fields. One item would characterize one product as well as hold its data.

class ProductItem(Item): product = Field() price = Field() category = Field() stock = Field()

Spider

All the spiders will be the same except for the selectors.

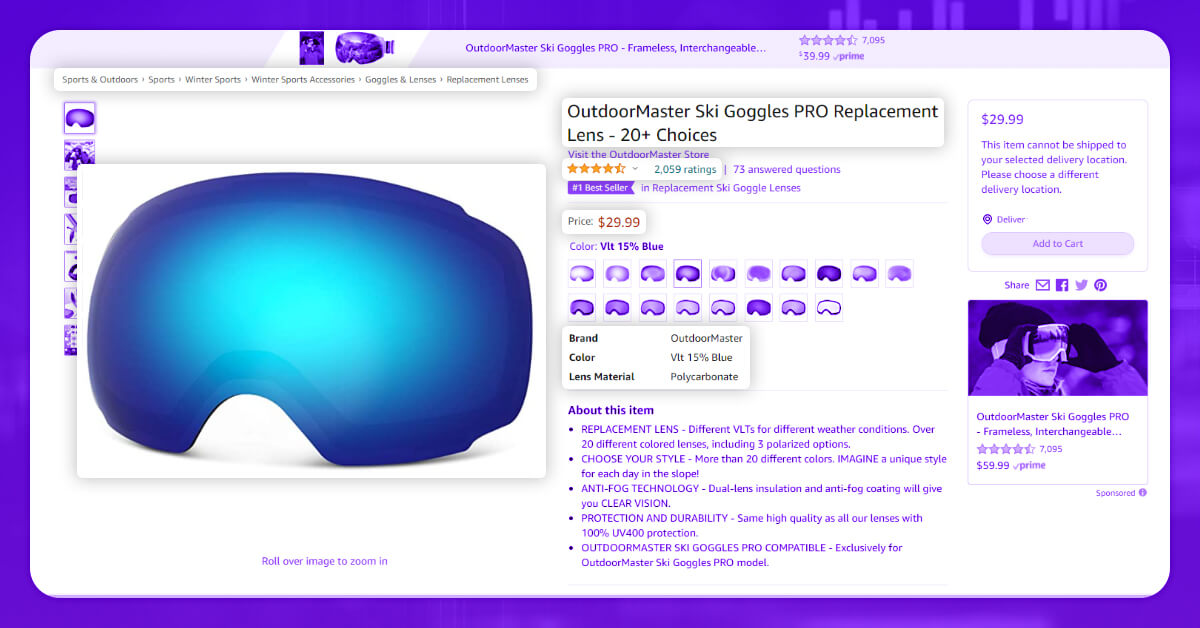

To make a spider, initially, we need to look at a website as well as its source codes, for instance:

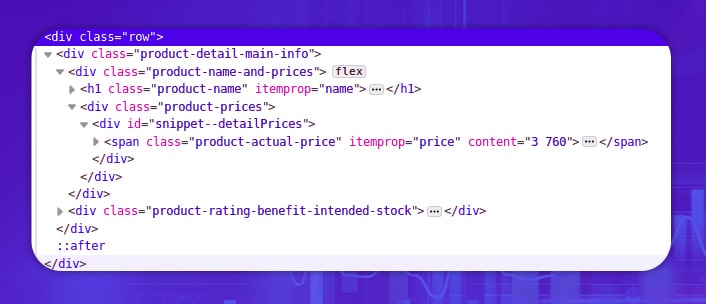

It is HTML of the one e-commerce site we are scraping as well as this part has the name as well as pricing information. One thing to search for is an itemprop feature. Many e-commerce websites utilize the schema. In a source code given, we get itemprop=”name” that contains product name as well as itemprop=”price” having product prices.

Choosing data fields depending on itemprop attributes provides us a superior chance that a data scraper won’t break in future whenever a website layout alters.

class Ecommerce(Spider):

name = "ecommerce"

start_urls = ["example.com/products/1", "example.com/products/2", "example.com/products/3", ]

def parse(self, response):

item_loader = ItemLoader(item=ProductItem(), response=response)

item_loader.default_input_processor = MapCompose(remove_tags)

item_loader.add_css("product", "h1[itemprop='name']")

item_loader.add_css("price", "span[itemprop=price]")

item_loader.add_css("stock", "span[itemprop=’stock’]")

item_loader.add_css("category", "a[data-track='Breadcrumb']")

return item_loader.load_item()

We are utilizing ItemLoader with the default input processor for removing HTML tags. We are choosing a category field from breadcrumb.

Making a Data Pipeline

If we wish to run any analysis about our data, we have to store that in some type of databases. For the project here, we are utilizing a MySQL database to store data. In case, you wish to utilize MySQL as you need to install MySQL-Python in case, this isn’t installed already:

sudo pip install MySQL-python

After that in Scrapy, we make a new class named DatabasePipeline with pipelines.py file:

class DatabasePipeline:

def process_item(self, item, spider):

return item

In the given class, we have many things to perform:

- Adding database connections’ parameters in a constructor

- Execute from_crawler method as well as find database connection details from settings.py

- Connect to a database whenever a spider begins

- Insert different data records in a database (one product at a time)

- While all are done, close a database connection

class DatabasePipeline:

# Add database connection parameters in the constructor

def __init__(self, db, user, passwd, host):

self.db = db

self.user = user

self.passwd = passwd

self.host = host

# Implement from_crawler method and get database connection info from settings.py

@classmethod

def from_crawler(cls, crawler):

db_settings = crawler.settings.getdict("DB_SETTINGS")

if not db_settings:

raise NotConfigured

db = db_settings['db']

user = db_settings['user']

passwd = db_settings['passwd']

host = db_settings['host']

return cls(db, user, passwd, host)

# Connect to the database when the spider starts

def open_spider(self, spider):

self.conn = MySQLdb.connect(db=self.db,

user=self.user, passwd=self.passwd,

host=self.host,

charset='utf8', use_unicode=True)

self.cursor = self.conn.cursor()

# Insert data records into the database (one item at a time)

def process_item(self, item, spider):

sql = "INSERT INTO table (field1, field2, field3) VALUES (%s, %s, %s)"

self.cursor.execute(sql,

(

item.get("field1"),

item.get("field2"),

item.get("field3"),

)

)

self.conn.commit()

return item

# When all done close the database connection

def close_spider(self, spider):

self.conn.close()

Theoretically, you can also hardcode the database connection details in a pipeline however we suggest you to put that in a settings file including that:

DB_SETTINGS = {

'db': "my_db",

'user': 'root',

'passwd': 'my_pass',

'host': '0.0.0.0',

}

As we only need to activate the pipeline in a settings file:

ITEM_PIPELINES = {

'ecommerce.pipelines.DatabasePipeline: 300,

}

Pricing Intelligence

We have concentrated on scraping e-commerce price data, and now it’s time to look at a few basic ways you could analyze and find actionable insights. This section will introduce some fundamental ways of analyzing pricing data and how to find actionable insights through it. We are using SQL queries and Pandas on the backend to retrieve data from a database. For generating charts on the front end, we are utilizing Google Charts.

Pricing History

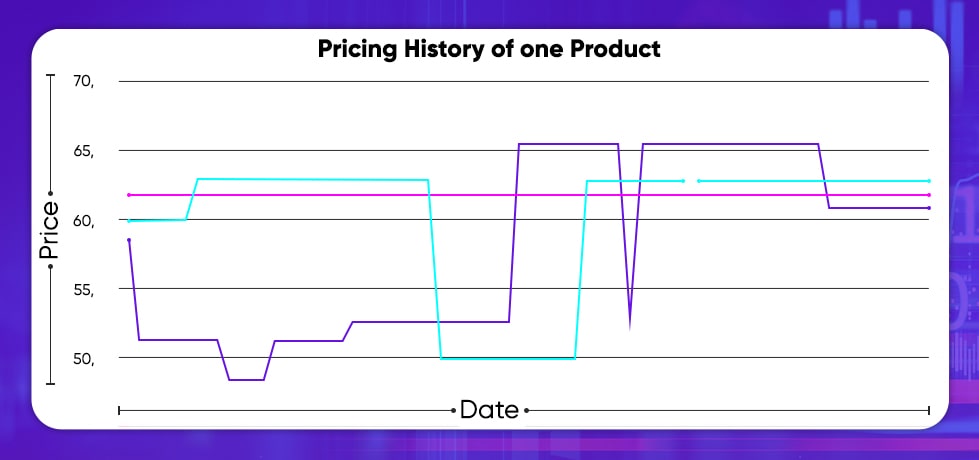

One significant analysis is the pricing history of a product. This shows how a product gets priced. This might be one way of helping determine the price strategy of the e-commerce store. Apparently, for that, you have to extract data frequently for a long time. However, when you have access to the data, you can see how the prices have changed or not in the past. You can also see what price strategy they utilize on vital shopping days including Black Friday.

In case, you go through the given history chart, you can get some good understanding about how you as well as your competitors are setting the pricing for a product. Depending on the past, you can estimate how competitors would change the future prices therefore, you can modify your strategy and make preparation for that.

Stocks

Amongst the main factors while shopping online is accessibility of chosen products. Might be we are ready to wait for a couple of weeks till a product we want to purchase is in the stock again however, mostly we need that in our hands as quickly as possible as well as might pay a bit more just for getting it quicker.

To benefit from these dynamics, we could extract the stock data from a product page and be informed if all the competitors are available for the provided product. Therefore, we can raise the prices.

Pricing Comparison

On a daily basis, the finest insight pricing intelligence can offer us is an overall view of the market and how the products fit into it. Using data scraping, we can have difficulty knowing how the key competitors are pricing similar products we sell.

Price Positioning

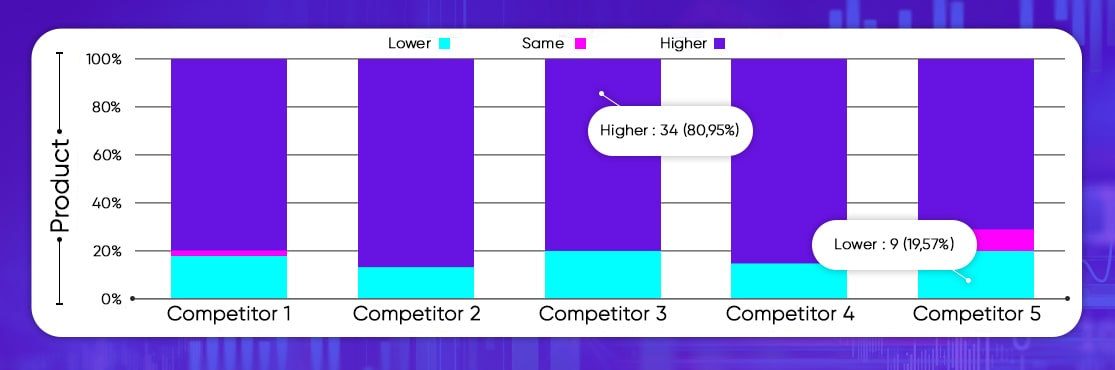

At higher levels, we could analyze how our products are lower priced, the similar, or higher than all the competitors. In the given chart, we have shown 34 products, which have higher prices than competitors 3 as well as 9 products having lower pricing than competitor 5.

For instance, we could need to position to get higher pricing than among the competitors or wish to get lower than additional one. This type of analysis could help you in adjusting prices consequently.

Category Analysis

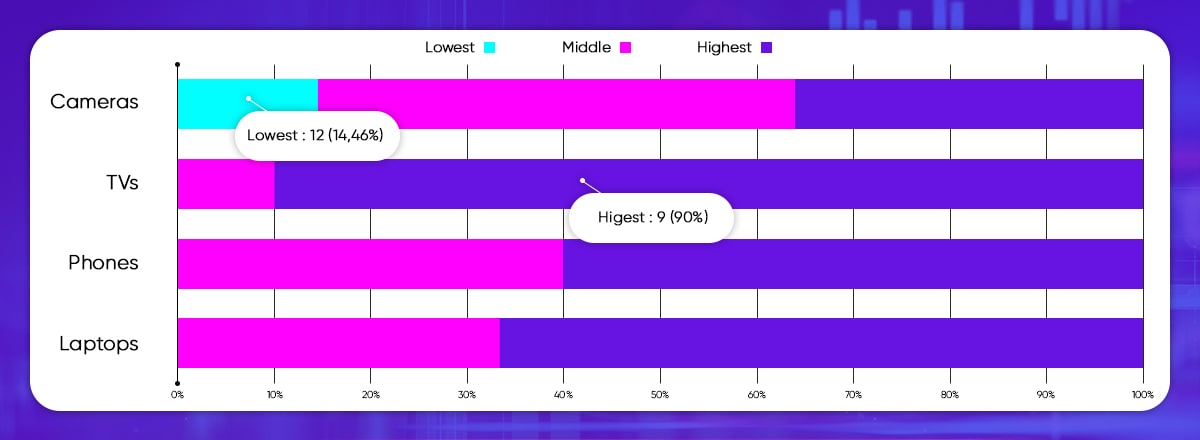

While we were writing a spider we scraped a product category also. That’s how we can group together different products depending on the category.

The chart here shows the pricing position compared with competitors in every product category. Here we get 12 products in the “Cameras” category with the lowest prices. In other categories, we are in the middle or at the highest pricing position.

Conclusion

Therefore, it is the procedure for extracting e-commerce websites and finding actionable insights from data with Python and a few visualizations. Initially, you plan about which data fields you need and from which websites. Secondly, you make web spiders for scraping and storing the data. (If you don’t wish to scrap with XPath or selectors, use the AI-based data scraping tool from X-Byte Enterprise Crawling. Finally, you can visualize data to know it and get business opportunities.

If you have e-commerce data-driven products and want help with data scraping, contact X-Byte Enterprise Crawling or ask for a free quote!